Introduction to Seedance 2.0

ByteDance has launched Seedance 2.0, an AI video generation model that adds native audio synchronization and 2K resolution output. Released in February 2026, the model is currently available in China through the Jimeng platform, and it has quickly drawn attention for its potential to reshape film production and online content creation.

Key features include reference-based generation capabilities, allowing for greater creative control, and a dual-branch diffusion transformer architecture that processes audio and video in parallel. These innovations position Seedance 2.0 as a strong competitor to existing models like OpenAI’s Sora 2 and Google’s Veo 3.1, marking a shift towards what some are calling “digital cinematography.”

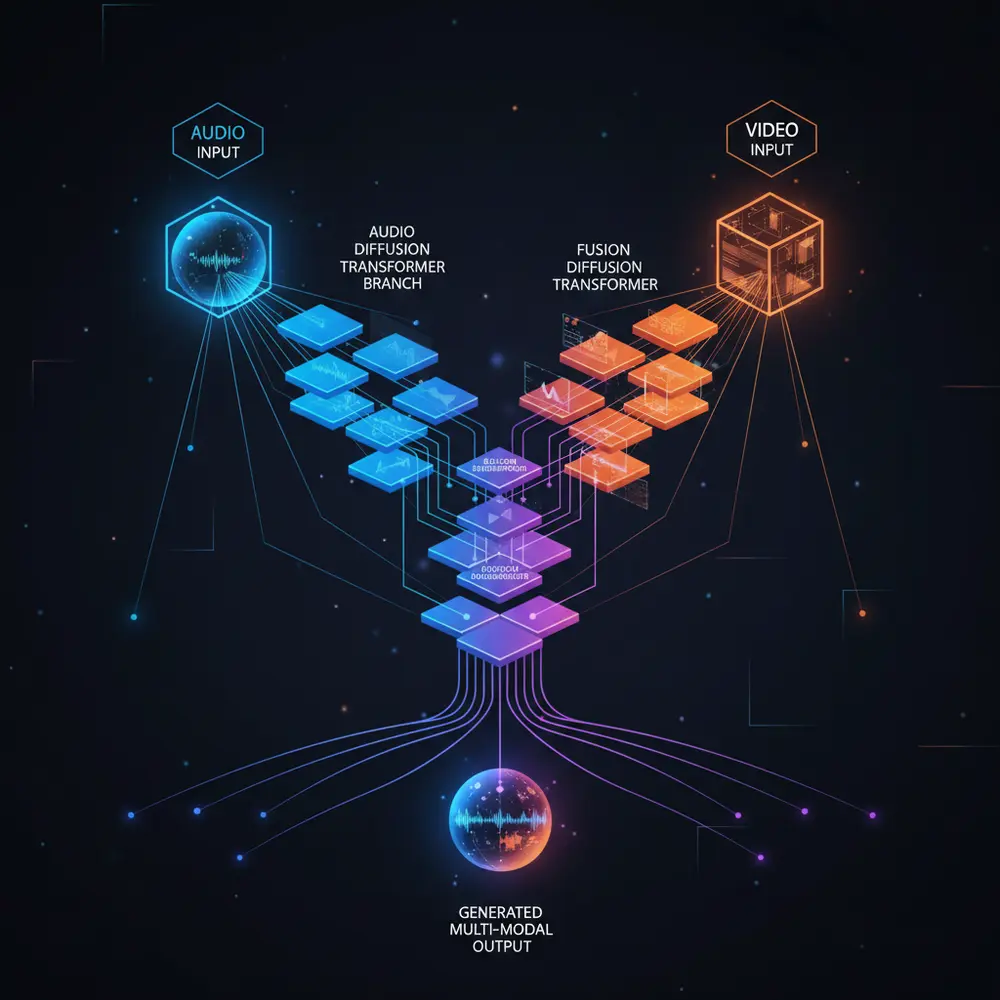

Technical Architecture: Dual-Branch Diffusion Transformer

Seedance 2.0 uses a dual-branch diffusion transformer architecture, a significant departure from earlier AI video generators. Instead of generating the video first and layering audio on afterward, as traditional methods do, it processes audio and visual elements simultaneously: the two branches run in parallel, which is intended to keep audio and video synchronized at the millisecond level.
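
To make the difference concrete, here is a toy Python sketch, not ByteDance's implementation: short lists of floats stand in for video and audio latents, and the `denoise_step` function and `mix` coupling weight are hypothetical, invented for illustration. In the joint loop both branches update from the same snapshot and read each other at every step; in the sequential pipeline the video is finished before the audio ever sees it.

```python
# Toy contrast between joint (dual-branch) and sequential generation.
# Illustrative only: lists of floats stand in for latents, and denoise_step
# and mix are hypothetical, not part of Seedance 2.0.


def denoise_step(own: list[float], other: list[float], mix: float) -> list[float]:
    """Shrink each value (a stand-in for denoising) and pull it toward `other`."""
    return [0.9 * x + mix * (y - x) for x, y in zip(own, other)]


def generate_joint(video, audio, steps=10, mix=0.2):
    """Both branches update in lockstep, each reading the other's current state."""
    for _ in range(steps):
        # The right-hand side is evaluated before assignment, so both updates
        # use the same snapshot: the branches run "in parallel".
        video, audio = denoise_step(video, audio, mix), denoise_step(audio, video, mix)
    return video, audio


def generate_sequential(video, audio, steps=10, mix=0.2):
    """Video is finished first; audio is then fitted to the final video."""
    for _ in range(steps):
        video = denoise_step(video, video, mix)  # other == own: no audio influence
    for _ in range(steps):
        audio = denoise_step(audio, video, mix)  # audio conditions on finished video
    return video, audio


if __name__ == "__main__":
    start_video = [1.0, 2.0]
    for start_audio in ([5.0, -5.0], [0.0, 0.0]):
        joint_video, _ = generate_joint(start_video, start_audio)
        seq_video, _ = generate_sequential(start_video, start_audio)
        print("joint:", [round(v, 3) for v in joint_video],
              "sequential:", [round(v, 3) for v in seq_video])
```

Changing the starting audio changes the jointly generated video but leaves the sequentially generated video untouched, which is the information-flow difference the two approaches hinge on.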

The model has 4.5 billion parameters and is built to handle long sequences effectively. This capacity lets it sustain fine detail and more complex narratives across a clip, improving the overall quality and coherence of the output.

Sequential post-production workflows can be time-consuming and often introduce misalignments. By integrating audio generation directly into video creation, Seedance 2.0 significantly reduces the time needed for editing and synchronization, which makes it a robust tool for filmmakers and content creators.

According to ByteDance, this approach improves efficiency and also expands users' creative possibilities, letting them focus more on storytelling than on technical adjustments.

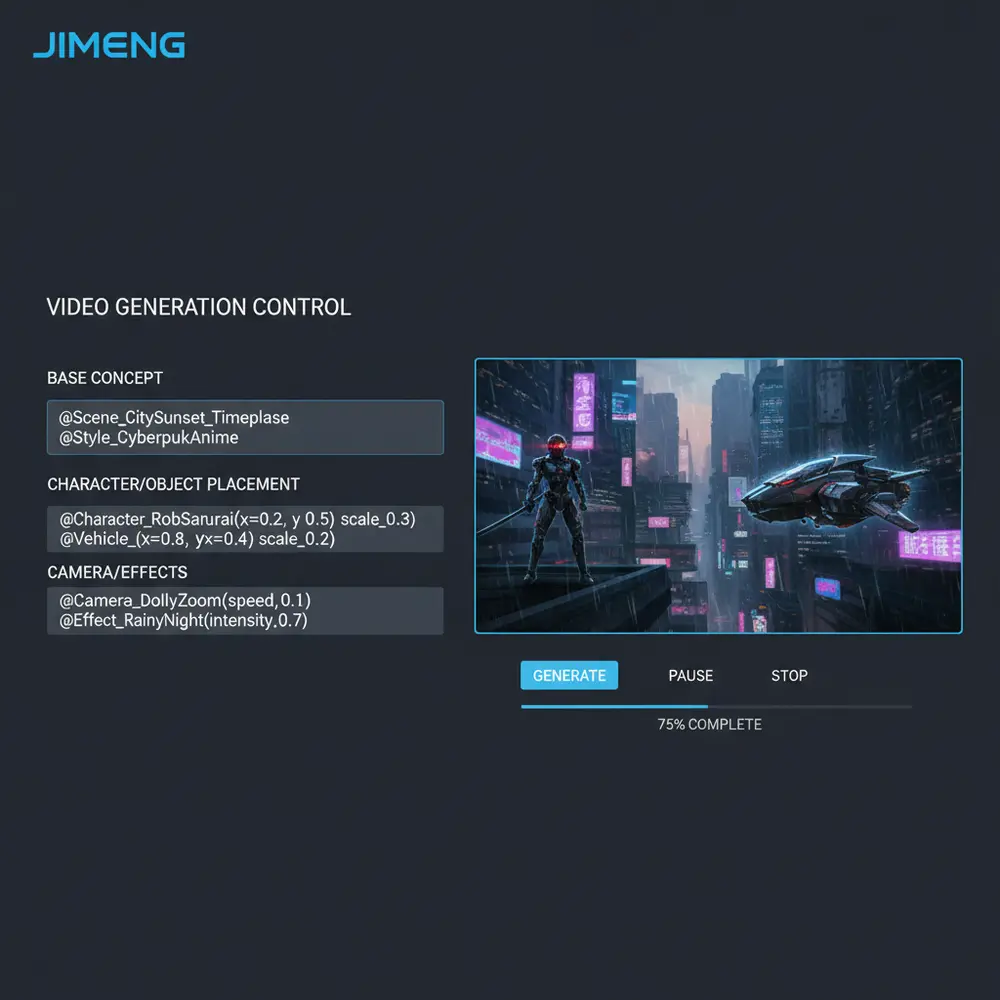

Multimodal Input and the @ Reference System

Seedance 2.0 accepts a wide range of inputs, including text, images, video clips, and audio files, which makes complex video content easier to create. A standout feature is the @ mention reference system, which lets creators tag specific elements in their input. Explicit tags help the model interpret and generate content according to the user's specifications, reducing hallucinations in the output.

The @ reference system works by letting users specify which elements they want to emphasize or incorporate in their video. For instance, a user could input a text prompt like “Create a scene featuring a sunset over a lake @sunset @lake,” guiding the AI to focus on those tagged elements. This capability enhances creative control, making it easier for filmmakers and content creators to achieve their vision.
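
The exact tag syntax Jimeng accepts is not documented here, so the following is a minimal sketch under assumed rules: a tag is `@` followed by word characters, and each tag should name an uploaded asset. The hypothetical `resolve_tags` helper maps tags to asset files and reports any tag with no matching upload, the kind of mismatch that leaves the model guessing.

```python
import re

# Assumed tag syntax: "@" followed by word characters (letters, digits, underscore).
TAG_PATTERN = re.compile(r"@(\w+)")


def extract_tags(prompt: str) -> list[str]:
    """Return @tags in order of first appearance, without duplicates."""
    tags: list[str] = []
    for name in TAG_PATTERN.findall(prompt):
        if name not in tags:
            tags.append(name)
    return tags


def resolve_tags(prompt: str, assets: dict[str, str]) -> tuple[dict[str, str], list[str]]:
    """Map each tag to an uploaded asset file; list tags with no matching asset."""
    tags = extract_tags(prompt)
    resolved = {tag: assets[tag] for tag in tags if tag in assets}
    missing = [tag for tag in tags if tag not in assets]
    return resolved, missing


if __name__ == "__main__":
    prompt = "Create a scene featuring a sunset over a lake @sunset @lake"
    uploads = {"sunset": "sunset_ref.jpg"}  # hypothetical uploaded files
    resolved, missing = resolve_tags(prompt, uploads)
    print(resolved)  # {'sunset': 'sunset_ref.jpg'}
    print(missing)   # ['lake']
```

Flagging `@lake` before submission tells the creator to either upload a lake reference or drop the tag.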

Moreover, the explicit tagging helps the model understand context and relationships between different inputs, leading to more coherent and contextually relevant outputs. As a result, Seedance 2.0 is positioned to redefine how video content is generated, allowing for a more directed and personalized creative process.

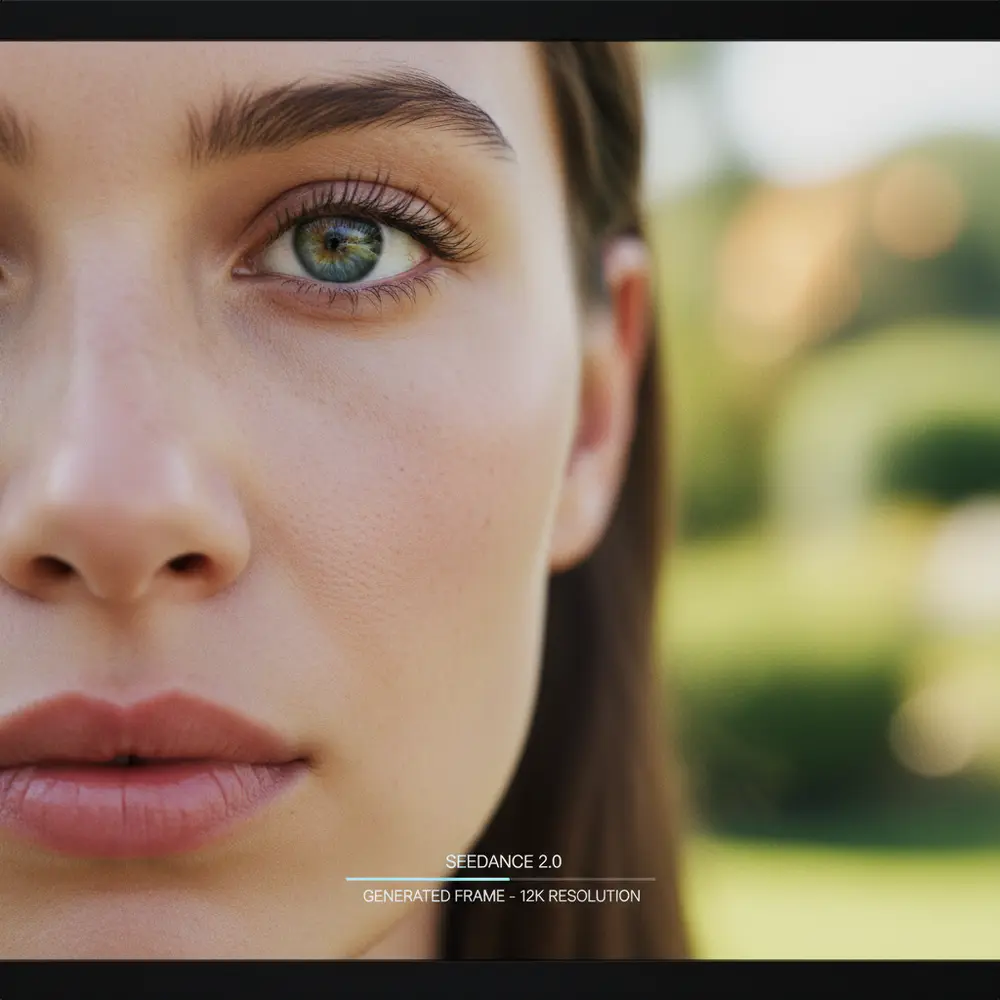

Native 2K Resolution and Output Quality

Seedance 2.0 generates true 2K output (2560×1440) natively, a significant advantage over traditional upscaling. An upscaler can only interpolate or synthesize pixels that were never rendered, so fine detail tends to come out soft or artificial; native generation preserves intricate textures, reflections, and fabric properties, resulting in more realistic visuals. This is crucial for industries where detail is paramount, such as fashion, film, and advertising.
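
A quick calculation shows why the gap matters. Assuming a 1080p (1920×1080) base render, an illustrative choice rather than a documented Seedance baseline, a 2560×1440 frame has 16/9 as many pixels, so at least 7/16 of the upscaled frame's pixel values cannot come one-to-one from the source and must be interpolated or synthesized:

```python
from fractions import Fraction


def pixel_count(width: int, height: int) -> int:
    return width * height


def interpolated_share(src: tuple[int, int], dst: tuple[int, int]) -> Fraction:
    """Minimum share of output pixels with no one-to-one source pixel after upscaling."""
    return 1 - Fraction(pixel_count(*src), pixel_count(*dst))


native_2k = pixel_count(2560, 1440)
full_hd = pixel_count(1920, 1080)  # assumed base render for comparison
print(native_2k, full_hd)                              # 3686400 2073600
print(Fraction(native_2k, full_hd))                    # 16/9
print(interpolated_share((1920, 1080), (2560, 1440)))  # 7/16
```

Using `Fraction` keeps the ratios exact, which makes the 16/9 scale factor (4/3 in each dimension) easy to read off.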

The model’s RayFlow optimization makes rendering 30% faster than in previous versions. Shorter render times let creators produce high-quality content more quickly, which for commercial and professional work can mean higher productivity and lower production costs.
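
"30% faster" can be read two ways, and the source does not say which applies: 30% higher throughput (render time divided by 1.3) or 30% less wall-clock time (render time multiplied by 0.7). This sketch compares both for a hypothetical 60-second baseline render; the baseline is an assumption, not a measured Seedance figure.

```python
def time_if_throughput_up(baseline_s: float, speedup: float) -> float:
    """'X% faster' as X% more renders per hour: time = baseline / (1 + X)."""
    return baseline_s / (1 + speedup)


def time_if_duration_cut(baseline_s: float, speedup: float) -> float:
    """'X% faster' as X% less wall-clock time: time = baseline * (1 - X)."""
    return baseline_s * (1 - speedup)


baseline = 60.0  # hypothetical render time in seconds, not a measured figure
print(f"{time_if_throughput_up(baseline, 0.30):.1f} s")  # 46.2 s
print(f"{time_if_duration_cut(baseline, 0.30):.1f} s")   # 42.0 s
```

Under the second reading the saving is larger (18 s versus about 13.8 s here), so it is worth checking which one a benchmark means.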

Overall, Seedance 2.0’s capabilities position it as a powerful tool for filmmakers and content creators looking to elevate their projects with high-resolution visuals and efficient workflows.

Native Audio-Video Synchronization

Seedance 2.0 generates sound and visuals natively in sync: audio and video are co-generated in tandem rather than one after the other. This yields millisecond-level alignment, which strengthens the narrative and emotional impact of the generated content.
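
For scale: the source does not state Seedance 2.0's frame rate or audio sample rate, so this sketch assumes common production defaults of 24 fps and 48 kHz. At those rates one video frame lasts about 41.7 ms and spans 2,000 audio samples, so millisecond-level alignment means placing sound well within a single frame (1 ms is 48 samples):

```python
FPS = 24              # assumed frame rate
SAMPLE_RATE = 48_000  # assumed audio sample rate (Hz)


def frame_duration_ms(fps: int = FPS) -> float:
    return 1000 / fps


def frame_to_samples(frame: int, fps: int = FPS, sample_rate: int = SAMPLE_RATE) -> tuple[int, int]:
    """Half-open range [start, end) of audio samples played during a video frame."""
    return frame * sample_rate // fps, (frame + 1) * sample_rate // fps


def offset_ms(sample: int, frame: int, fps: int = FPS, sample_rate: int = SAMPLE_RATE) -> float:
    """Signed distance in ms between an audio sample and the start of a frame."""
    return 1000 * (sample / sample_rate - frame / fps)


print(round(frame_duration_ms(), 1))   # 41.7
print(frame_to_samples(10))            # (20000, 22000)
print(round(offset_ms(20048, 10), 3))  # 1.0
```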

The attention bridge technology in Seedance 2.0 is designed to keep audio cues matched to visual elements, creating a more immersive experience for viewers. This contrasts sharply with traditional post-production workflows, where audio is added manually after the visual content is complete, a process that can introduce misalignments and a less cohesive final product.
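
ByteDance has not detailed how its attention bridge works, so the following is only a generic illustration of bidirectional cross-attention between two token streams, one common way to let parallel branches exchange information. It uses identity projections and a single head for brevity (real transformers learn separate query, key, and value projections and use many heads), and the `bridge` function is hypothetical:

```python
import math

Vector = list[float]


def softmax(scores: Vector) -> Vector:
    """Numerically stable softmax."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries: list[Vector], keys: list[Vector], values: list[Vector]) -> list[Vector]:
    """Single-head scaled dot-product attention with identity projections."""
    scale = math.sqrt(len(keys[0]))
    out = []
    for q in queries:
        weights = softmax([sum(a * b for a, b in zip(q, k)) / scale for k in keys])
        out.append([sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))])
    return out


def bridge(video: list[Vector], audio: list[Vector]) -> tuple[list[Vector], list[Vector]]:
    """Hypothetical bridge: each stream attends to the other and adds the result residually.

    Identity projections require both streams to share the same token width.
    """
    video_ctx = cross_attention(video, audio, audio)  # video queries, audio keys/values
    audio_ctx = cross_attention(audio, video, video)  # audio queries, video keys/values
    new_video = [[x + c for x, c in zip(v, vc)] for v, vc in zip(video, video_ctx)]
    new_audio = [[x + c for x, c in zip(a, ac)] for a, ac in zip(audio, audio_ctx)]
    return new_video, new_audio


if __name__ == "__main__":
    video_tokens = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]  # 3 toy video tokens
    audio_tokens = [[2.0, 0.0], [0.0, 2.0]]              # 2 toy audio tokens
    v, a = bridge(video_tokens, audio_tokens)
    print(len(v), len(a))  # 3 2: each stream keeps its own length
```

Because each stream keeps its own length, video and audio can run at different token rates while still reading each other at every layer where such a bridge is placed.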

By integrating audio and video generation, Seedance 2.0 not only streamlines the production process but also elevates the quality of creative outputs. Filmmakers and content creators can now focus on storytelling without the technical challenges of syncing audio and visuals in post-production.

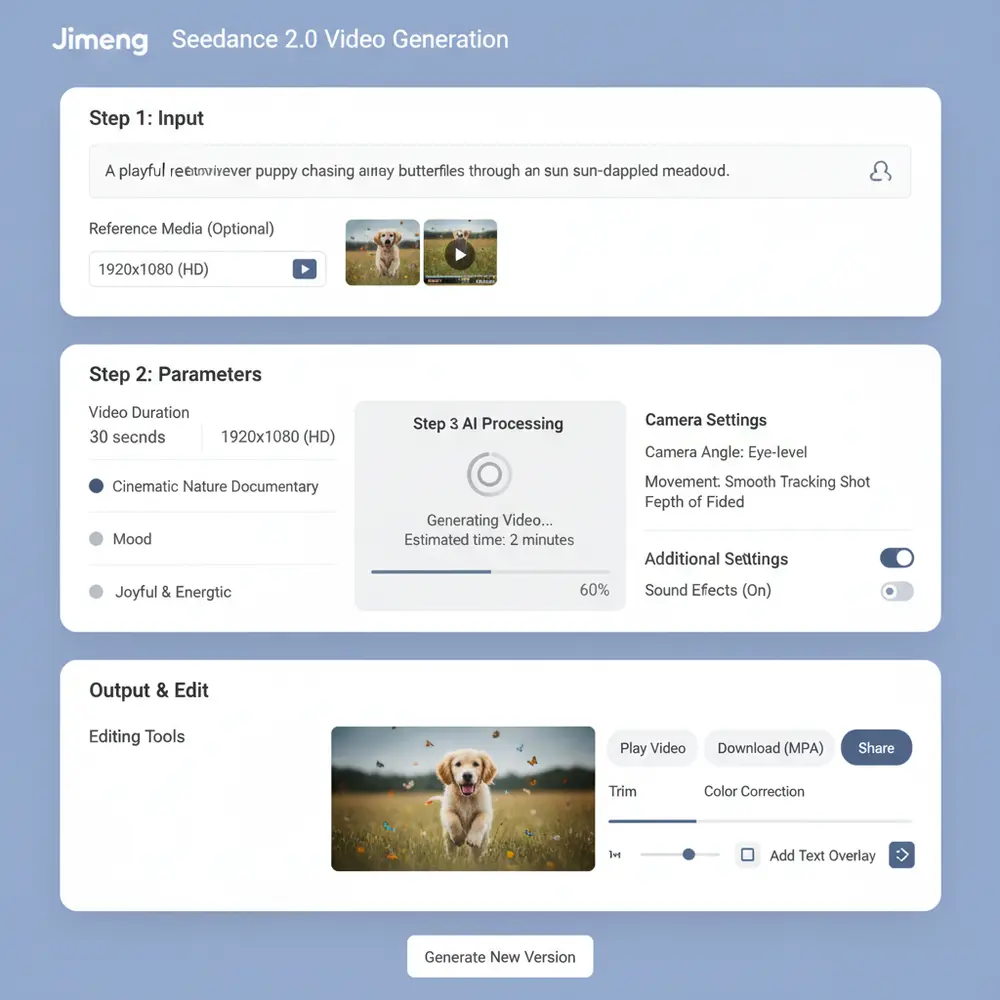

Practical Workflow: Using Seedance 2.0 on Jimeng

Using Seedance 2.0 on the Jimeng platform requires a structured approach to harness its full potential. Here’s how to effectively navigate the process.

Accessing the Model

To access Seedance 2.0, users must register and log in to the Jimeng platform, where the model is currently available. A basic understanding of the interface is needed for optimal use.

Preparing and Uploading Multimodal Assets

Users should prepare their multimodal assets, including video clips, audio files, and images, before uploading them to the platform. Ensuring compatibility with the model’s requirements is crucial for smooth operation.
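
A simple preflight check can catch format problems before upload. The accepted extensions and the 15-second duration cap below are placeholders, not Jimeng's published requirements; replace them with the platform's actual limits.

```python
from dataclasses import dataclass
from pathlib import Path
from typing import Optional

# PLACEHOLDER limits for illustration only; replace with Jimeng's real requirements.
ALLOWED_EXTENSIONS = {
    "image": {".jpg", ".jpeg", ".png", ".webp"},
    "video": {".mp4", ".mov"},
    "audio": {".mp3", ".wav"},
}
MAX_DURATION_S = {"video": 15.0, "audio": 15.0}


@dataclass
class Asset:
    path: str
    kind: str                           # "image", "video" or "audio"
    duration_s: Optional[float] = None  # None for still images


def check_asset(asset: Asset) -> list[str]:
    """Return human-readable problems; an empty list means the asset looks fine."""
    allowed = ALLOWED_EXTENSIONS.get(asset.kind)
    if allowed is None:
        return [f"{asset.path}: unknown asset kind {asset.kind!r}"]
    problems = []
    ext = Path(asset.path).suffix.lower()
    if ext not in allowed:
        problems.append(f"{asset.path}: {ext or 'no extension'} is not an accepted {asset.kind} format")
    limit = MAX_DURATION_S.get(asset.kind)
    if limit is not None and asset.duration_s is not None and asset.duration_s > limit:
        problems.append(f"{asset.path}: {asset.duration_s:.1f} s exceeds the {limit:.0f} s limit")
    return problems


if __name__ == "__main__":
    uploads = [
        Asset("sunset_ref.PNG", "image"),
        Asset("lake_pan.avi", "video", 6.0),
        Asset("waves.wav", "audio", 22.5),
    ]
    for item in uploads:
        for problem in check_asset(item):
            print(problem)
```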

Writing @ Reference Prompts for Precise Control

For precise control over the generated content, users can employ @ reference prompts. These prompts guide the model in aligning the audio and visual elements according to user specifications, enhancing creative output.

Tips for Iterative Refinement and Versioning

We recommend refining results iteratively. After generating initial outputs, users can adjust prompts and settings and keep each variant as a separate version, making it easy to compare results and return to earlier ones as the creative vision evolves.
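
One lightweight way to keep versions straight is to log each prompt with its settings and diff any two iterations. This is a hypothetical helper for a creator's own bookkeeping, not a Jimeng feature, and the settings keys in the example (`duration_s`, `style`) are invented:

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass
class Version:
    number: int
    prompt: str
    settings: dict[str, Any]
    note: str = ""


@dataclass
class VersionLog:
    versions: list[Version] = field(default_factory=list)

    def record(self, prompt: str, settings: dict[str, Any], note: str = "") -> Version:
        """Store a new iteration; settings are copied so later edits don't leak in."""
        version = Version(len(self.versions) + 1, prompt, dict(settings), note)
        self.versions.append(version)
        return version

    def diff(self, a: int, b: int) -> dict[str, tuple[Any, Any]]:
        """What changed between versions a and b (1-based numbers)."""
        va, vb = self.versions[a - 1], self.versions[b - 1]
        changes: dict[str, tuple[Any, Any]] = {}
        if va.prompt != vb.prompt:
            changes["prompt"] = (va.prompt, vb.prompt)
        for key in sorted(set(va.settings) | set(vb.settings)):
            if va.settings.get(key) != vb.settings.get(key):
                changes[key] = (va.settings.get(key), vb.settings.get(key))
        return changes


if __name__ == "__main__":
    log = VersionLog()
    log.record("A sunset over a lake @sunset @lake", {"duration_s": 5, "style": "warm"})
    log.record("A sunset over a lake @sunset @lake, slow dolly-in",
               {"duration_s": 8, "style": "warm"}, note="longer, moving camera")
    for key, (old, new) in log.diff(1, 2).items():
        print(f"{key}: {old!r} -> {new!r}")
```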

Common Pitfalls and Troubleshooting

Common pitfalls include mismatched asset formats and insufficient prompt specificity. Users should double-check compatibility and clarity in their prompts to avoid errors. The Jimeng platform also provides troubleshooting resources to assist users.

In summary, Seedance 2.0 on Jimeng offers a comprehensive workflow for video generation. By following these guidelines, users can maximize their creative potential and navigate the platform effectively.

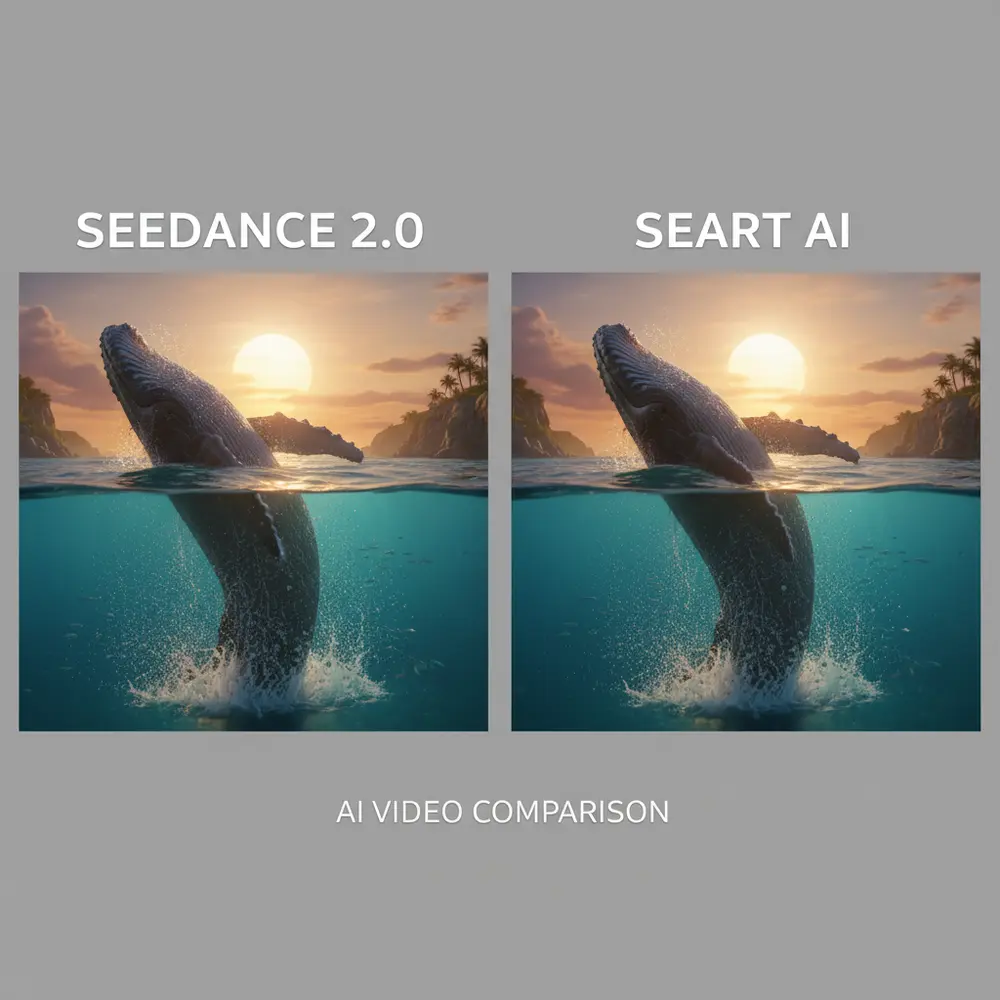

Comparison: Seedance 2.0 vs SeaArt AI

Seedance 2.0 and SeaArt AI represent two distinct approaches to AI video generation, each with unique strengths. Here’s how they stack up against each other in key areas:

- Resolution and Output Quality: Seedance 2.0 offers 2K resolution output, significantly enhancing visual fidelity compared to SeaArt AI, which primarily operates at lower resolutions. This difference is crucial for content creators seeking high-quality visuals.
- Audio-Video Synchronization: A standout feature of Seedance 2.0 is its native audio-video synchronization, allowing for seamless integration of sound and visuals during generation. In contrast, SeaArt AI typically requires post-production adjustments for audio alignment, making Seedance 2.0 more efficient for rapid content creation.
- Multimodal Input Support: Seedance 2.0 supports multimodal inputs, including video references and audio cues, enabling a more nuanced creative process. SeaArt AI, while capable, lacks the same level of reference tagging, limiting its flexibility in complex projects.
- Performance Benchmarks and Availability: Seedance 2.0 was launched in February 2026 and is currently available through the Jimeng platform in China. Early performance benchmarks indicate a faster generation speed compared to SeaArt AI, which is still in the process of scaling its capabilities.
- Use Case Suitability and Pricing Models: Seedance 2.0 is well-suited for filmmakers and marketers looking for high-quality, synchronized output, while SeaArt AI may appeal more to casual users due to its lower pricing model.

Overall, the choice between Seedance 2.0 and SeaArt AI will depend on specific project needs and budget considerations.

Key Use Cases and Applications

Seedance 2.0 offers a range of applications across various sectors, enhancing creative processes and output quality. Here are some key use cases:

Film and Cinema Previsualization

Filmmakers can leverage Seedance 2.0 for previsualization, allowing directors to visualize scenes and refine narratives before actual shooting. This capability streamlines production workflows and enhances creative decision-making.

Commercial Advertising and Branded Content

Brands can utilize Seedance 2.0 to create high-quality advertisements and branded content efficiently. The model’s audio-video synchronization feature allows for compelling storytelling that resonates with target audiences.

Social Media and Short-Form Video Creation

Content creators on platforms like TikTok and Instagram can use Seedance 2.0 to produce engaging short-form videos. The model’s rapid generation capabilities enable quick turnaround times, catering to the fast-paced nature of social media.

Virtual Events and Educational Video Production

In the realm of virtual events and education, Seedance 2.0 facilitates the creation of informative and entertaining videos. Its integrated audio generation supports clear communication, enhancing viewer engagement.

Future Outlook and Industry Considerations

Seedance 2.0 is poised for a broader global release, with expectations for integration across various platforms. As ByteDance continues to enhance its capabilities, potential feature roadmaps may include improved user interfaces and expanded customization options for creators.

Competitive Landscape

In an increasingly crowded market, Seedance 2.0 faces competition from established players like OpenAI’s Sora 2 and Google’s Veo 3.1. As these companies continue to innovate, we anticipate the emergence of new rivals aiming to capture market share in AI-driven content creation.

Ethical Considerations

With the rise of advanced AI-generated content, ethical concerns regarding content authenticity and ownership are becoming more pronounced. We’re watching how ByteDance addresses these issues, particularly as creators and audiences demand transparency in AI-generated works.

Our Take

The advancements represented by Seedance 2.0 could redefine the landscape of content creation, but the industry must navigate the challenges of integration and ethics. Our take is that while the technology is promising, its success will depend on how effectively it balances innovation with responsible practices.

Conclusion and Best Practices

Seedance 2.0 introduces several standout features, including native audio-video synchronization and 2K resolution output, making it a significant advancement in AI video generation. Its dual-branch diffusion transformer architecture co-generates audio and visuals simultaneously, while the @ reference system gives users finer creative control.

Best Practices for Effective Video Generation

To maximize the capabilities of Seedance 2.0, users should focus on providing clear reference inputs, experimenting with different creative styles, and utilizing the integrated audio features to create cohesive narratives.

Next Steps for Readers

For those interested in exploring Seedance 2.0 further, we recommend engaging with available tutorials and community forums to share insights and learn from other creators. Staying updated on future releases and enhancements will also be crucial as this technology evolves.